Relay-BP: The World’s Fastest, Most Accurate Decoder for qLDPC Error Correction Codes

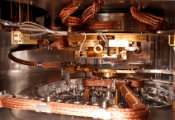

Aug 04, 2025 -- Until recently, the field of quantum computing had struggled to find a decoder powerful enough to fix the errors of a large-scale, fault-tolerant quantum computer in real time. A little over a year ago, however, IBM researchers spanning disciplines from electrical engineering to many-body physics put their heads together and began tackling this challenge.

Last month, they published a preprint detailing their new algorithm, Relay-BP, which achieves logical error rates orders of magnitude lower than all prior state-of-the-art decoders for qLDPC codes. It is also flexible and compact, making it ideal for decoding quantum memory experiments on an FPGA (field-programmable gate array), a computing device that can be reconfigured after manufacturing. In their paper, the researchers show Relay-BP to be the most performant real-time decoder for qLDPC codes to date in terms of flexibility, compactness, speed, and accuracy. Its arrival marks another step toward fault-tolerant quantum computing.

The error correction decoder is the component that works out which errors occurred during a quantum computation. It is an algorithm running on classical hardware attached to a quantum computer, and it is one of several components that work together to store and process information, find errors, and correct them. The decoder consumes all of the physical measurements taken throughout a logical circuit and uses them to identify the most probable set of errors. The decoder, along with all the other components, is necessary to achieve fault-tolerant quantum computing.

Finding a good decoder

Quantum error correction (QEC) refers to the methods by which we protect quantum data when storing, transmitting, and manipulating it. Qubits are particularly sensitive to their environment, so we must devise a way to protect them if we want them to maintain their quantum state. If we are able to produce a quantum computer with sufficiently low logical error rates (i.e. with minimal disturbance to our qubits), we can achieve fault-tolerant quantum computing. QEC is an essential part of this mission.

In practice, QEC uses a code that brings together a bunch of unreliable physical qubits to create reliable logical qubits. Because of the laws of quantum physics, we cannot extract error information from logical qubits directly, as doing so would destroy their quantum information. Instead, we measure properties of the unreliable qubits that form a kind of "signature" of the underlying error.

These measurements are known as syndromes: pieces of information that give us clues about what’s causing the error. It’s then up to the decoder to decipher the syndrome data and determine how to correct the problem.
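To make the idea of a syndrome concrete, here is a minimal Python sketch that uses a classical 3-bit repetition code as a stand-in. This is an assumption for illustration only: real qLDPC decoders work with much larger check matrices that describe both qubit and measurement faults. Each row of the parity-check matrix `H` is one check, and the syndrome is `H·e mod 2`. The hypothetical helper `brute_force_decode` then returns the lowest-weight error consistent with the syndrome, which is the most probable error pattern when errors are independent and each occurs with the same probability below one half. Its exhaustive search grows exponentially with code size, which is why practical decoders need faster algorithms.

```python
from itertools import combinations

# Toy parity-check matrix for the 3-bit repetition code (illustrative, not a
# qLDPC code): each row checks the parity of two neighbouring bits.
H = [
    [1, 1, 0],
    [0, 1, 1],
]

def syndrome(H, error):
    """Return the syndrome s = H·e (mod 2) for a binary error vector e."""
    return [sum(h * e for h, e in zip(row, error)) % 2 for row in H]

def brute_force_decode(H, s):
    """Return a lowest-weight error consistent with syndrome s (None if none exists).

    Tries every error pattern in order of weight, so the cost grows
    exponentially with the number of bits: only usable on toy codes.
    """
    n = len(H[0])
    for weight in range(n + 1):
        for support in combinations(range(n), weight):
            candidate = [1 if i in support else 0 for i in range(n)]
            if syndrome(H, candidate) == s:
                return candidate
    return None

error = [0, 1, 0]                    # a flip on the middle bit
s = syndrome(H, error)               # both checks fire
correction = brute_force_decode(H, s)
print(s, correction)                 # [1, 1] [0, 1, 0]
```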

A fault-tolerant quantum computer requires a decoder that is flexible, accurate, fast, and compact. That is, it must decode a full suite of quantum circuits accurately, it must decipher errors fast enough that it does not build up an ever-increasing backlog of undecoded syndromes, and it should use only a small amount of computational resources.
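The backlog requirement can be illustrated with a simple queue model. The sketch below assumes, hypothetically, that a new round of syndrome data arrives every `round_time_us` microseconds and that the decoder handles rounds one at a time, taking `decode_time_us` per round. The function name and the numbers are made up for illustration and are not measurements of any IBM system.

```python
def pending_rounds(num_rounds, round_time_us, decode_time_us):
    """Rounds not yet fully decoded when the last round of syndrome data arrives
    (counting the one in progress). Round k arrives at k * round_time_us and the
    decoder handles rounds one at a time, in arrival order."""
    decoder_free_at = 0
    finish_times = []
    for k in range(num_rounds):
        arrival = k * round_time_us
        start = max(arrival, decoder_free_at)  # wait for both the data and the decoder
        decoder_free_at = start + decode_time_us
        finish_times.append(decoder_free_at)
    last_arrival = (num_rounds - 1) * round_time_us
    return sum(1 for t in finish_times if t > last_arrival)

for decode_time in (8, 12):
    print(decode_time, [pending_rounds(n, 10, decode_time) for n in (1000, 2000)])
# 8 [1, 1]
# 12 [168, 335]
```

With a new round every 10 µs, a decoder needing 8 µs per round never has more than one round in flight, no matter how long the experiment runs. A decoder needing 12 µs falls behind by about one round in six, so its backlog keeps growing with the length of the computation.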

Decoders exist in classical computation as well, and classical algorithms such as belief propagation (BP) can shed light on how quantum decoders work. How does BP work? It is what’s known as a message-passing algorithm, because it passes information between a collection of compute units. Each unit acts like an individual person, and together they behave like a group of people talking to one another. In error correction decoding, it’s as though the people are trying to figure out who among them is responsible for a crime, that is, the source of the computational error, which could be the failure of some qubit or the act of measuring a qubit itself. Each person holds their own belief about how likely each suspect is to be responsible, and by talking to each other and sharing what they know, they can reach a conclusion and identify the perpetrator.
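Below is a minimal sketch of syndrome-based BP using the common min-sum simplification, run on a toy 3-bit repetition code. Check nodes and bit nodes repeatedly exchange log-likelihood-ratio messages, where a positive value means "probably no error." The function name `min_sum_bp`, the prior flip probability `p`, and the iteration cap are illustrative assumptions; this is not IBM's decoder.

```python
import math

def min_sum_bp(H, s, p, max_iter=20):
    """Syndrome-based min-sum belief propagation (a common simplification of BP).

    H: parity-check matrix as 0/1 rows, each row touching at least two bits.
    s: observed syndrome bits.  p: prior probability that any one bit is flipped.
    Returns (estimated_error, converged).
    """
    m, n = len(H), len(H[0])
    bits_of = [[j for j in range(n) if H[i][j]] for i in range(m)]
    checks_of = [[i for i in range(m) if H[i][j]] for j in range(n)]
    prior = math.log((1 - p) / p)  # log-likelihood ratio; > 0 means "probably no error"

    # Messages live on the edges between checks and bits, keyed by (check, bit).
    q = {(i, j): prior for i in range(m) for j in bits_of[i]}  # bit -> check
    r = {(i, j): 0.0 for i in range(m) for j in bits_of[i]}    # check -> bit

    estimate = [0] * n
    for _ in range(max_iter):
        # Check nodes: tell each bit what the *other* bits on the check imply.
        for i in range(m):
            for j in bits_of[i]:
                others = [q[(i, k)] for k in bits_of[i] if k != j]
                sign = -1.0 if s[i] else 1.0  # a fired check flips the sign
                for v in others:
                    if v < 0:
                        sign = -sign
                r[(i, j)] = sign * min(abs(v) for v in others)
        # Bit nodes: add the prior to everything the bit's checks reported.
        marginal = [prior + sum(r[(i, j)] for i in checks_of[j]) for j in range(n)]
        for j in range(n):
            for i in checks_of[j]:
                q[(i, j)] = marginal[j] - r[(i, j)]  # leave out the recipient's own message
        estimate = [1 if mj < 0 else 0 for mj in marginal]
        # Stop as soon as the estimated error reproduces the observed syndrome.
        if all(sum(H[i][j] * estimate[j] for j in range(n)) % 2 == s[i] for i in range(m)):
            return estimate, True
    return estimate, False

# Toy 3-bit repetition code; both checks fire, pointing at the middle bit.
H = [[1, 1, 0],
     [0, 1, 1]]
print(min_sum_bp(H, [1, 1], p=0.1))  # ([0, 1, 0], True)
```

On this tiny code the loop converges in one or two iterations. The convergence test is what signals success: when it never passes within `max_iter`, the decoder has failed to find a correction, which is the situation plain BP often runs into on qLDPC codes.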

However, on qLDPC codes standard BP often struggles to converge to a solution: sometimes it oscillates between two solutions, and at other times it converges to an incorrect or uncertain one. Until the arrival of Relay-BP, researchers who wanted the most accurate decoder available usually chose BP+OSD, belief propagation working in tandem with another algorithm known as ordered statistics decoding (OSD). However, OSD relies on expensive matrix computations (Gaussian elimination), so even though it can be accurate, it is difficult to implement in real time in an efficient and cost-effective way.
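To see where OSD's cost comes from, here is a sketch of its simplest form, order-0 OSD (OSD-0), in Python. It assumes BP has already produced a log-likelihood ratio per bit (`llr`, lower meaning more likely flipped), sorts the columns of `H` by that reliability, and solves `H_S e_S = s` exactly by Gaussian elimination over GF(2). The elimination alone takes on the order of m·n·min(m, n) bit operations for an m×n check matrix, which is what makes OSD hard to run in real time on large codes. The function name `osd0` is hypothetical, and the higher-order search that BP+OSD decoders typically add on top is not shown.

```python
def osd0(H, s, llr):
    """Order-0 ordered-statistics decoding (OSD-0) as BP post-processing.

    llr[j] is BP's final log-likelihood ratio for bit j (lower = more likely
    flipped). Bits are sorted from least to most reliable, and Gaussian
    elimination over GF(2) solves H_S e_S = s on the first linearly independent
    columns S; every other bit is set to 0.
    """
    m, n = len(H), len(H[0])
    order = sorted(range(n), key=lambda j: llr[j])  # least reliable first
    # Augmented matrix [H with permuted columns | s], one list per row.
    A = [[H[i][j] for j in order] + [s[i]] for i in range(m)]
    pivots = []  # (row, permuted column) of each pivot found
    row = 0
    for col in range(n):
        if row == m:
            break
        hit = next((k for k in range(row, m) if A[k][col]), None)
        if hit is None:
            continue  # column is a combination of earlier ones: not a pivot
        A[row], A[hit] = A[hit], A[row]
        # Clear this column from every other row; XOR is addition mod 2.
        for k in range(m):
            if k != row and A[k][col]:
                A[k] = [a ^ b for a, b in zip(A[k], A[row])]
        pivots.append((row, col))
        row += 1
    # Leftover rows are all-zero in H, so a 1 on the right means no solution.
    if any(A[k][n] for k in range(row, m)):
        raise ValueError("syndrome is not in the column space of H")
    error = [0] * n
    for k, col in pivots:
        error[order[col]] = A[k][n]  # pivot bit takes its row's right-hand side
    return error

H = [[1, 1, 0],
     [0, 1, 1]]
# Suppose BP did not converge but leaned toward the middle bit being flipped.
print(osd0(H, [1, 1], llr=[2.0, -2.0, 2.0]))  # [0, 1, 0]
```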

For these reasons and more, Relay-BP outperforms all other qLDPC decoders across the four key metrics we use to judge a decoder’s performance: flexibility, compactness, speed, and accuracy. Flexibility assesses how well the decoder works across different kinds of qLDPC codes. Compactness measures the resources an implementation uses on a CPU or FPGA. Speed measures whether the decoder keeps pace with incoming syndromes rather than building up a backlog. Accuracy is measured by the logical error rate it achieves.

Relay-BP builds heavily on BP, which on its own is quite fast but not as accurate as Relay-BP. BP+OSD is much more accurate than BP, but falls short on compactness and speed. In their paper, the IBM researchers show that Relay-BP delivers a roughly 10x improvement in accuracy when compared to BP+OSD, while maintaining and in some cases improving upon the speed of BP. Relay-BP showed similar performance improvements over a range of leading alternative methods the research team examined.

This means that, to our knowledge, Relay-BP is the only real-time qLDPC decoder that hits all four nails on the head. It is not only flexible and compact, but also faster and more accurate than all known alternative methods.

Müller began at IBM as a firmware developer for quantum computers and became interested in error correction after working on a firmware project for a piece of error correction hardware. When given the opportunity to work in the field, he accepted without hesitation. With a team of error correction experts by his side, Müller helped turn the dream of a fast, efficient, accurate, and compact QEC decoder into reality.

“I’m doing fundamental research, but then seeing it turned into a product with my colleagues sitting in the room next to me. It’s a kind of luxury that not many people get to experience. It’s almost like a composer hearing their symphony performed by an orchestra for the first time,” said Müller.

Shortly after beginning his work on this new QEC decoding algorithm, Müller was able to devise a highly competitive version. He achieved this feat by turning his focus away from OSD, and towards BP alone.

Müller started by exploring modifications to BP from previous work in the field, focusing on those that seemed cheap and impactful. “Basically, I went bargain shopping,” he said. “I took all the cheap bits and tried to amalgamate them together.”

The result was Relay-BP: an algorithm faster and more accurate than existing decoders for quantum LDPC codes, while also being cheaper and easier to implement. From there, the team worked tirelessly to refine the new algorithm, looking for practical applications in a broad variety of scenarios. Their efforts were essential to the final realization of Müller’s idea.

The solution

What is it about Relay-BP specifically that makes it better than its predecessors? One important factor lies in its name: "relay." Relay-BP chains several BP runs together (Figure 1d), and each run can use error information gathered by earlier runs to update its beliefs. Ultimately, this allows the algorithm to converge on a final error belief, and thus a correction, more quickly and more accurately.
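The relay idea can be sketched as a driver loop. This is a simplified illustration, not the published algorithm: `run_leg` is a hypothetical callable standing in for one disordered-memory BP run, and `relay_decode`, `gamma_range`, `num_legs`, and `solutions_wanted` are names and values chosen here for readability. In this sketch, each leg draws fresh per-bit memory strengths and starts from the marginals the previous leg ended with, and any leg that satisfies the syndrome contributes a candidate correction. Ranking candidates by Hamming weight is also an assumption.

```python
import random

def relay_decode(run_leg, n, prior_llr, num_legs=5, solutions_wanted=2,
                 gamma_range=(-0.25, 0.85), seed=0):
    """Chain several memory-BP "legs" and return the lightest valid correction.

    run_leg(memory_init, gammas) -> (marginals, estimate, converged) is a
    hypothetical callable standing in for one disordered-memory BP run.
    Returns None if no leg satisfied the syndrome.
    """
    rng = random.Random(seed)
    memory = list(prior_llr)  # the first leg starts from the priors
    best, found = None, 0
    for _ in range(num_legs):
        # Fresh, per-bit memory strengths for every leg (illustrative range).
        gammas = [rng.uniform(*gamma_range) for _ in range(n)]
        marginals, estimate, converged = run_leg(memory, gammas)
        if converged:
            found += 1
            # Hamming weight as a simple stand-in for a likelihood-based weight.
            if best is None or sum(estimate) < sum(best):
                best = estimate
            if found >= solutions_wanted:
                break
        memory = marginals  # relay: the next leg inherits this leg's beliefs
    return best
```

Passing `marginals` forward instead of resetting to `prior_llr` is the "relay" step. Because the loop keeps going after a success, it can collect several valid corrections without restarting from scratch.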

Standard BP generally doesn’t use parameters, meaning every compute unit typically remembers and transfers information in the same way. It uses a global, uniform rule to determine how much weight to assign each message. It’s kind of like trying to figure out whether a rumor is true, where you collect information from several sources and you treat all sources as equally credible.

Relay-BP effectively adds knobs to the message-passing algorithm, letting us control each node’s memory strength, that is, how much it remembers (or forgets), and thus how it updates its beliefs based on the messages it receives from its neighbors. One can describe the “memory transience” and “disorder” of Relay-BP as introducing variation in the credibility of each source, as well as variation in how readily each node forgets messages it has heard before. This plays a big role in making Relay-BP faster and more accurate than all prior decoders for qLDPC error correction codes.

Adjusting memory strength is not new in the world of optimization; it is known to help some algorithms converge on a solution. However, when all memory strengths are the same, or "symmetric," the algorithm can get stuck in what we call “trapping sets.” Müller introduced the idea of disordered, or "asymmetric," memory strengths to deal with this problem. With disordered memory strengths, it becomes easier to converge on an error solution because each node experiences different local constraints. The ability to control memory strength, and thus its disorder, was absolutely critical to Relay-BP’s effectiveness.
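The memory and disorder ideas can be sketched as a per-bit update rule. The sketch assumes a memory-BP-style form in which each bit's bias for the next iteration blends its fixed prior with its own previous marginal, weighted by a memory strength `g`. Under that assumption, setting every `g` to 0 recovers plain BP, equal values give symmetric memory, and drawing `g` independently per bit gives disordered memory. The function names, the sampling interval, and the example numbers are hypothetical.

```python
import random

def next_bias(prior, marginal, gammas):
    """Bias each bit starts the next BP iteration with (assumed memory-BP form):
    (1 - g) * prior + g * previous marginal, with one memory strength g per bit.
    g = 0 is plain BP, which always restarts from the prior."""
    return [(1 - g) * p + g * m for p, m, g in zip(prior, marginal, gammas)]

def draw_memory_strengths(n, low, high, seed=None):
    """Disordered memory: one strength per bit, drawn uniformly from [low, high].
    Setting low == high gives the symmetric case where every bit behaves alike."""
    rng = random.Random(seed)
    return [rng.uniform(low, high) for _ in range(n)]

# Two bits caught in a symmetric state: identical priors and identical marginals.
prior = [2.0, 2.0]
marginal = [-4.0, -4.0]

plain = next_bias(prior, marginal, [0.0, 0.0])          # [2.0, 2.0]
symmetric = next_bias(prior, marginal, [0.5, 0.5])      # [-1.0, -1.0]: still tied
gammas = draw_memory_strengths(2, -0.25, 0.85, seed=1)  # range includes negatives
disordered = next_bias(prior, marginal, gammas)         # two different values
print(plain, symmetric, disordered)
```

Two bits with identical priors and marginals stay exactly tied under symmetric memory, while independently drawn strengths give them different biases. This is the kind of symmetry breaking the disordered approach relies on.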

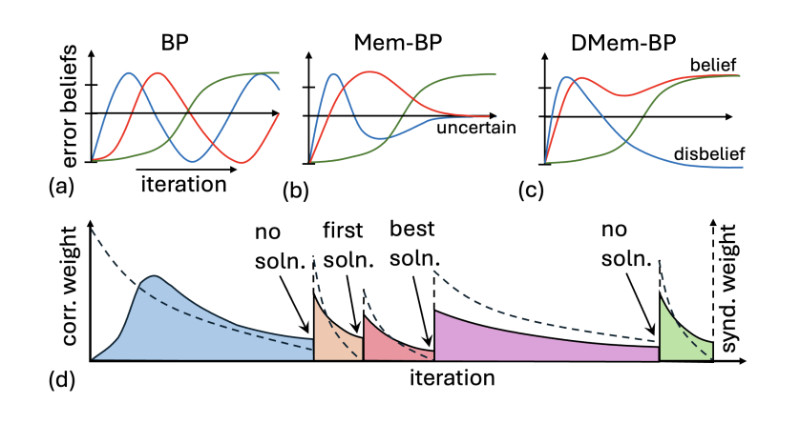

Figure 1 from the Relay-BP arXiv paper. (a) In BP, the belief that each error occurred is updated over each iteration. However, some beliefs can oscillate (red, blue) instead of converging (green). (b) A memory term can dampen oscillations, but symmetric trapping sets may lead to convergence to uncertain beliefs (red, blue). (c) Disordered memory strengths can break symmetries, leading to decisive beliefs forming a valid solution (i.e., the syndrome is canceled). (d) Relay-BP chains together different DMem-BP runs, which can further aid convergence and provide multiple valid solutions without restarting. Solid lines indicate the weight of the proposed correction, while dashed lines indicate the syndrome weight after the proposed correction.

What’s more, while exploring this asymmetry, Müller discovered that memory strengths could be negative, which turned out to be quite useful. If the algorithm believes it has found the best solution but that belief is incorrect, negative memory allows it to forget its solution and look for a different one instead. Ultimately, this increases the likelihood that it will land on the true best solution. The “forgetting” power offered by negative memory strengths also helps when the algorithm has not found a solution at all. The discovery of negative memory’s value was completely accidental, said Müller: it emerged from a coding oversight.
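A short rearrangement shows why a negative strength acts as "forgetting." It assumes, for illustration, a memory update in which bit $j$'s bias $\Lambda_j(t)$ blends its prior $\Lambda_j(0)$ with its previous marginal $M_j(t-1)$ using strength $\gamma_j$, with positive values meaning "probably no error." Under that assumption, the bias moves away from the prior by $\gamma_j$ times the amount the belief has moved, so a negative $\gamma_j$ pushes the bias in the opposite direction to the current belief:

$$
\Lambda_j(t) = (1-\gamma_j)\,\Lambda_j(0) + \gamma_j\,M_j(t-1)
\;\Longleftrightarrow\;
\Lambda_j(t) - \Lambda_j(0) = \gamma_j\,\bigl[M_j(t-1) - \Lambda_j(0)\bigr]
$$

For example, with $\Lambda_j(0) = 2$ and a confident marginal $M_j(t-1) = 6$:

$$
\gamma_j = 0.5:\ \Lambda_j(t) = 2 + 0.5\cdot(6-2) = 4,
\qquad
\gamma_j = -0.25:\ \Lambda_j(t) = 2 - 0.25\cdot(6-2) = 1
$$

Positive memory reinforces the current belief. Negative memory leaves the bit less confident than its prior, which makes it easier for the decoder to abandon its current solution.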

Interdisciplinary thinking leads to innovation

Müller came from a background of many-body physics, where problems involving memory strengths are not uncommon. With this insight, he noticed how adjusting memory strengths and negative memory impacted the algorithm, something which may not have happened had he not been there.

Beyond Müller, the team includes researchers with backgrounds in software development, systems, condensed-matter physics, number theory, and more—underscoring the importance of interdisciplinary thinking in a burgeoning field like quantum.

This gives us a sense of what it will take for the world to realize quantum computing more broadly: a culture of learning. “It’s hard to find people who know about physics, error correction, and electrical engineering, but those are the people we needed,” said Müller. At IBM, broadening one’s horizons has become part of the culture of the quantum computing team. “It’s cool to see how everyone is diving into learning something new.”

Where do we go from here?

The IBM team is ramping up efforts to produce a real-time decoder system and eventually test their decoders under real device noise. Testing could start as early as 2026 with Kookaburra, and the team expects that Relay-BP will play an important role in that research demonstration.

“This is the point where we want to go from saying we have an algorithm to saying we have an efficient hardware implementation of this algorithm,” said Blake Johnson, distinguished engineer and quantum capabilities architect at IBM Quantum. “Relay-BP may not be the final version that gets used in Starling, but it’s part of the larger process of scientific discovery.”

Relay-BP brings us one step closer to the promise of fault-tolerant quantum computing, but real-time decoding remains a challenge. The present study focuses on error correction in quantum memory—our ability to keep qubits in a stable state for an extended period of time—rather than quantum processing.

One particular roadblock is that the decoding hardware available today is not compact enough for the kinds of logical operations (i.e., quantum processing) we want to perform. As problems grow larger, the decoding hardware needed to handle them becomes harder to scale, which is why compactness matters. However, the team is already exploring modifications to the decoder to address these concerns and is confident in its ability to deliver a solution capable of decoding for the fault-tolerant architecture promised by the IBM Quantum Roadmap. Relay-BP is one step along the way to bringing useful quantum computing to the world.